Sangmin Bae

Graduate School of AI, KAIST

Email: bsmn0223xkxkxk@gmail.com / bsmn0223xkxkxk@kaist.ac.kr

Google Scholar, CV, Github, Linkedin, X

I am currently looking for Industry Research Scientist Positions (or equivalent roles), starting in Fall 2026.

My doctoral research has been driven by two primary keywords: Efficiency and Multimodality.

I have extensive experience developing Scalable and Efficient Foundation Language Models. I have also proposed novel Adaptive Computation methodologies that significantly boost Inference-Efficiency. Furthermore, my expertise covers various modalities (including Vision, Audio, and Tabular data), where I focus on enhancing Training- and Data-Efficiency.

Recently, I have been focusing on specific topics such as Knowledge Distillation, Agentic AI, Speculative Decoding, and Linear Attention.

News

Oct. 2025: 🦋 Started postdoctoral research at KAIST AI.

Sep. 2025: 🎊 A paper on 'Mixture-of-Recursions' accepted at NeurIPS 2025.

Aug. 2025: 🎊 A paper on 'Contrastive Decoding' accepted at TMLR 2025.

Jun. 2025: 👨‍🎓 Successfully defended PhD dissertation.

Jun. 2025: Won $30,000 Google Grant Project.

Education

- Ph.D. in Graduate School of AI, KAIST. Advised by Se-Young Yun. Mar. 2021 - Aug. 2025

- M.S. in Industrial and Systems Engineering, KAIST. Advised by Se-Young Yun. Mar. 2019 - Feb. 2021

- B.S. in Industrial and Systems Engineering, KAIST. Mar. 2014 - Feb. 2019

Publications

Google Scholar

*: 1st co-authors, †: corresponding authors, C: conferences, J: journals, W: workshops, P: preprints, D: dissertation

[P4] Sangmin Bae, Bilge Acun, Haroun Habeeb, Seungyeon Kim, Chien-Yu Lin, Liang Luo, Junjie Wang, Carole-Jean Wu. Hybrid Architectures for Language Models: Systematic Analysis and Design Insights. Preprint 2025. [pdf]

[P3] Bilge Acun*, Prasoon Sinha*, Newsha Ardalani, Sangmin Bae, Alicia Golden, Chien-Yu Lin, Meghana Madhyastha, Fei Sun, Neeraja J. Yadwadkar, Carole-Jean Wu. Composer: A Search Framework for Hybrid Neural Architecture Design. Preprint 2025. [pdf]

[W6] Youngrok Park*, Hojung Jung*, Sangmin Bae, Se-Young Yun†. Temporal Alignment Guidance: On-manifold Sampling in Diffusion Models. Neural Information Processing Systems Workshop on Structured Probabilistic Inference and Generative Modeling (NeurIPSW) 2025.

[D] Sangmin Bae. Accelerating Large Language Model Inference via Early-Exiting Algorithms. PhD Dissertation 2025. [pdf]

[C17] Sangmin Bae*, Yujin Kim*, Reza Bayat*, Sungnyun Kim, Jiyoun Ha, Tal Schuster, Adam Fisch, Hrayr Harutyunyan, Ziwei Ji, Aaron Courville†, Se-Young Yun†. Mixture-of-Recursions: Learning Dynamic Recursive Depths for Adaptive Token-Level Computation. Conference on Neural Information Processing Systems (NeurIPS) 2025. [pdf] [code]

|

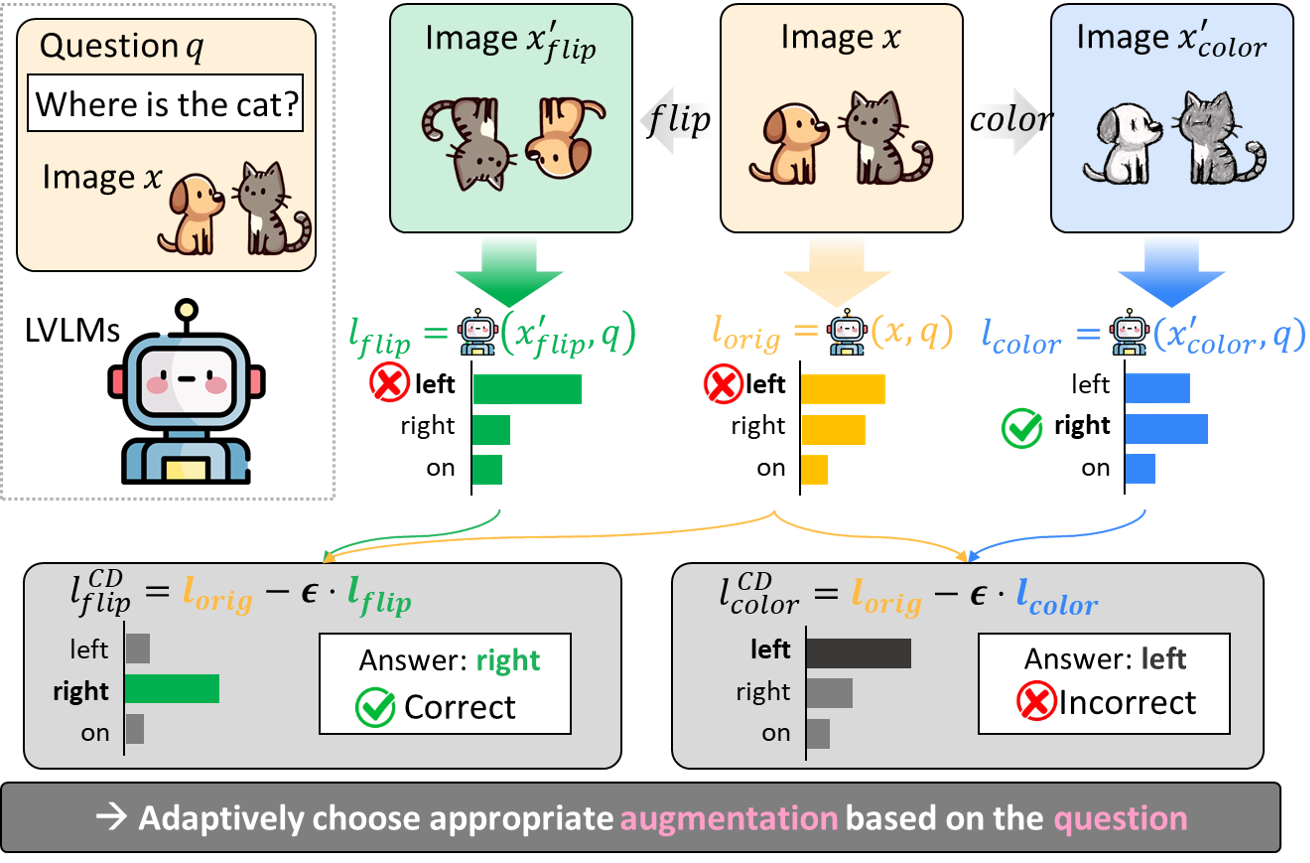

[J1] Sihyeon Kim*, Boryeong Cho*, Sangmin Bae, Sumyeong Ahn†, Se-Young Yun†. VSCoDe: Visual-Augmentation Selection for Contrastive Decoding. Transactions on Machine Learning Research (TMLR) 2025. [pdf] |

|

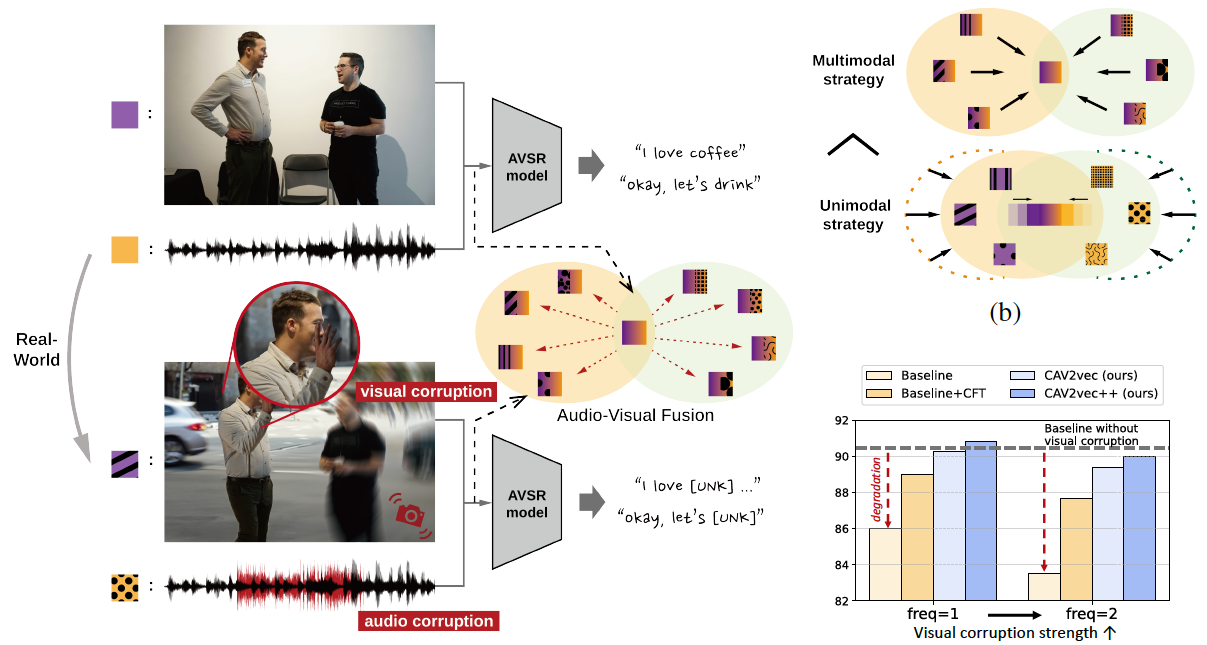

[C16] Sungnyun Kim, Kangwook Jang, Sangmin Bae, Sungwoo Cho, Se-Young Yun†. MoHAVE: Mixture of Hierarchical Audio-Visual Experts for Robust Speech Recognition. International Conference on Machine Learning (ICML) 2025. [pdf] |

|

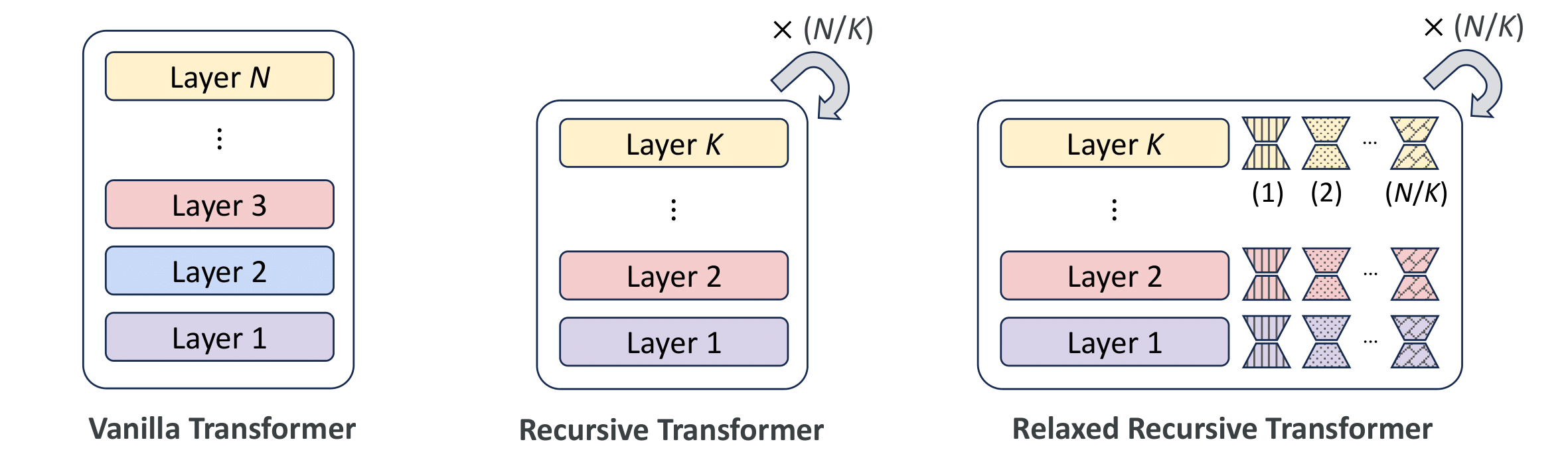

[C15] Sangmin Bae, Adam Fisch, Hrayr Harutyunyan, Ziwei Ji, Seungyeon Kim, Tal Schuster†. Relaxed Recursive Transformers: Effective Parameter Sharing with Layer-wise LoRA. International Conference on Learning Representations (ICLR) 2025. [pdf] |

[C14] Sungnyun Kim, Sungwoo Cho, Sangmin Bae, Kangwook Jang, Se-Young Yun†. Multi-Task Corrupted Prediction for Learning Robust Audio-Visual Speech Representation. International Conference on Learning Representations (ICLR) 2025. [pdf] [code]

|

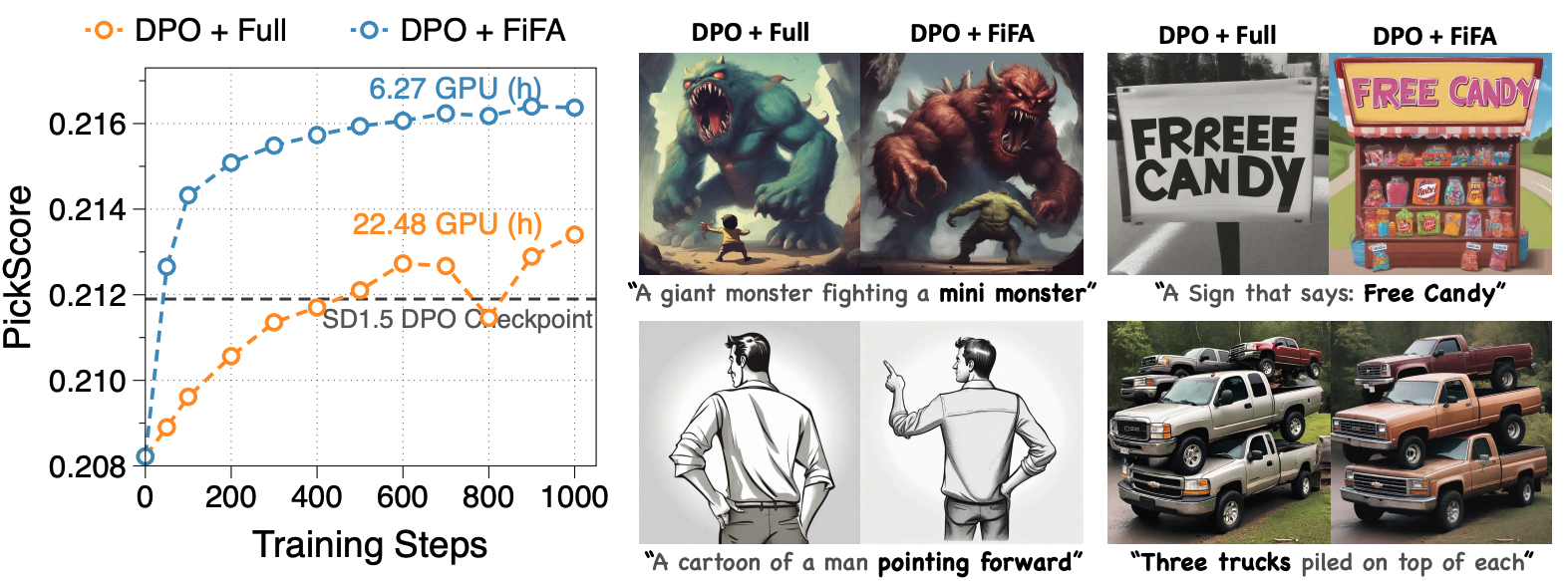

[C13] Yongjin Yang*, Sihyeon Kim*, Hojung Jung, Sangmin Bae, SangMook Kim, Se-Young Yun†, Kimin Lee†. Automated Filtering of Human Feedback Data for Aligning Text-to-Image Diffusion Models. International Conference on Learning Representations (ICLR) 2025. [pdf] |

|

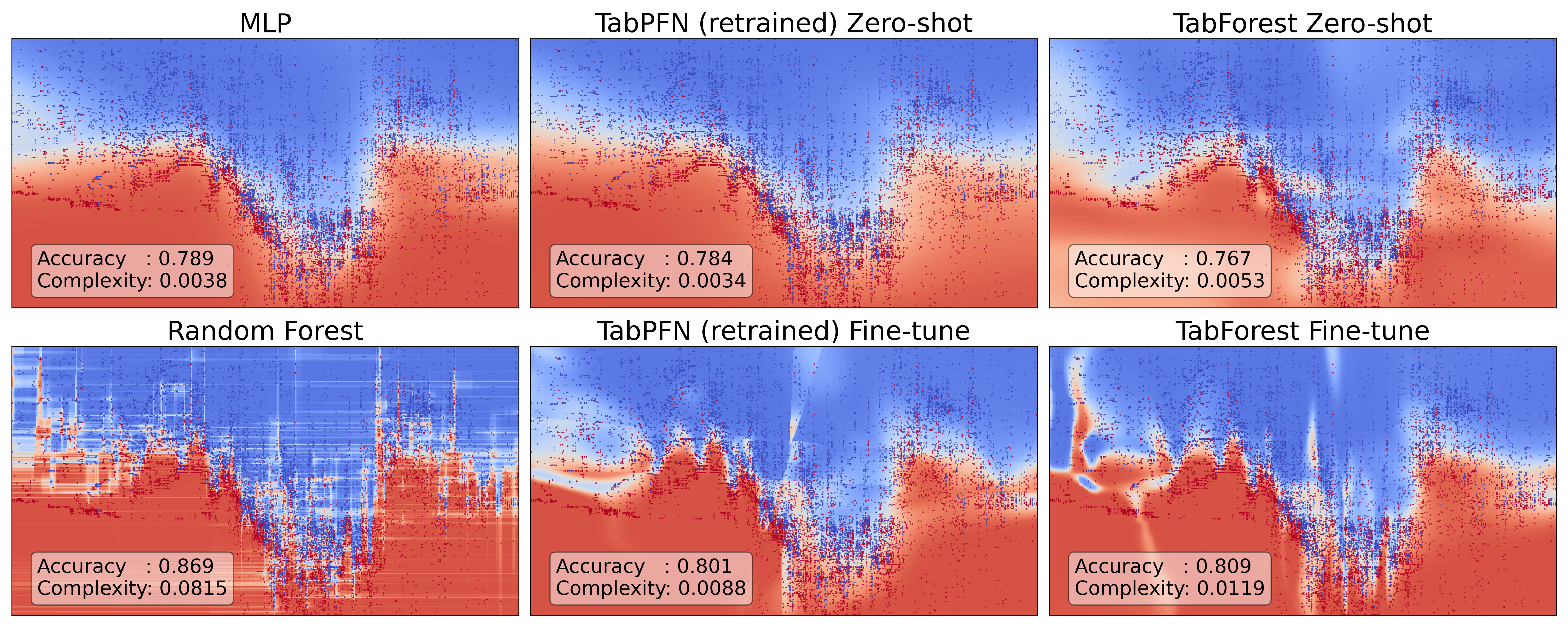

[P2] Felix den Greejen*, Sangmin Bae, Stephen Cha, Se-Young Yun†. Fine-tuned In-Context Learning Transformers are Excellent Tabular Data Classifiers. Preprint 2024. [pdf] [code] |

|

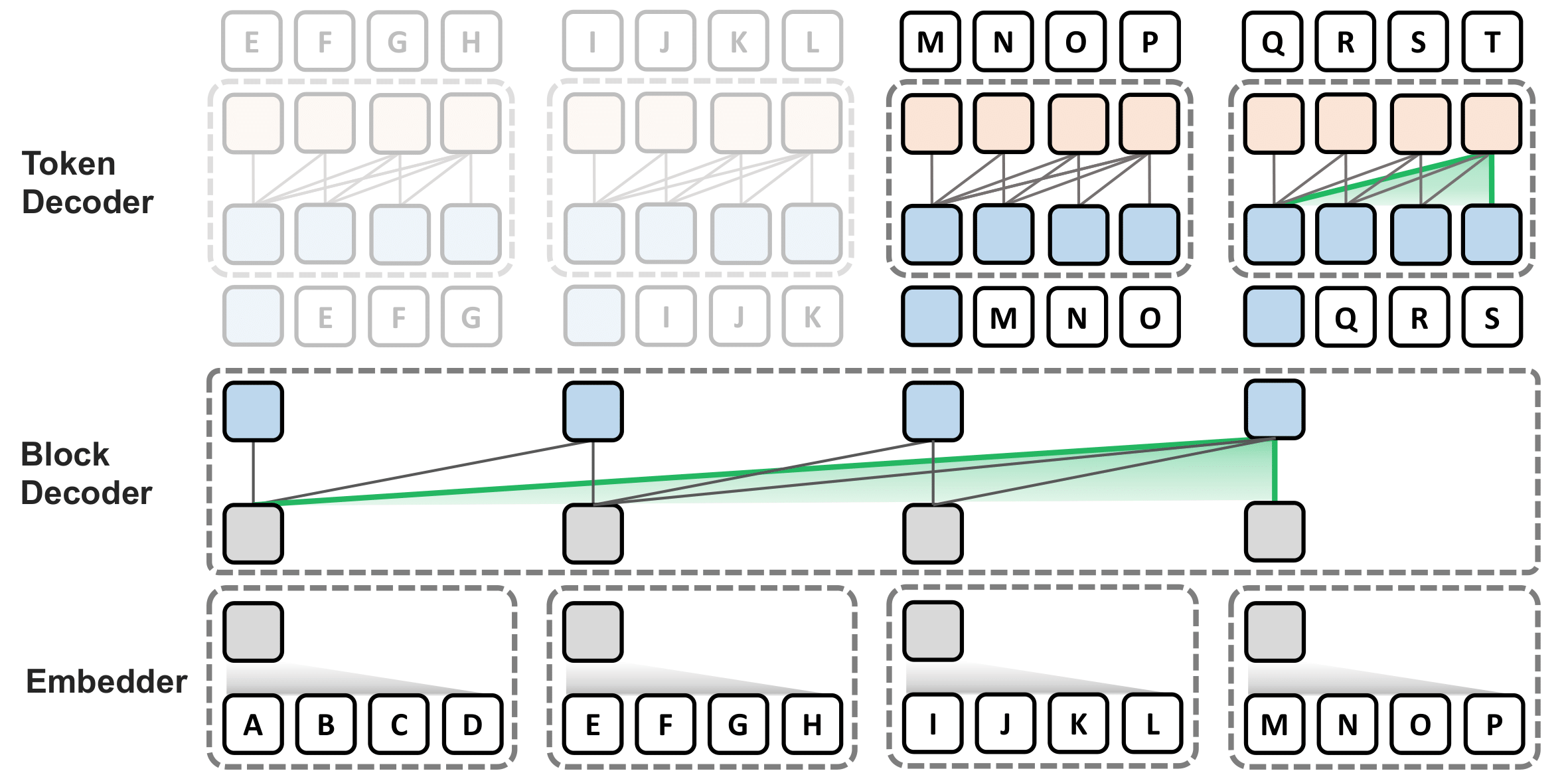

[C12] Namgyu Ho*, Sangmin Bae*, Taehyeon Kim, Hyunjik Jo, Yireun Kim, Tal Schuster, Adam Fisch, James Thorne†, Se-Young Yun†. Block Transformer: Global-to-Local Language Modeling for Fast Inference. Conference on Neural Information Processing Systems (NeurIPS) 2024. [pdf] [code] |

[C11] Sungnyun Kim*, Kangwook Jang*, Sangmin Bae, Hoirin Kim†, Se-Young Yun†. Learning Video Temporal Dynamics with Asymmetric Cross-Modal Attention for Robust Audio-Visual Speech Recognition. IEEE Spoken Language Technology Workshop (SLT) 2024. [pdf]

[C10] Yunseon Choi, Sangmin Bae, Seonghyun Ban, Minchan Jeong, Chuheng Zhang, Lei Song, Li Zhao, Jiang Bian, Kee-Eung Kim†. Hard Prompts Made Interpretable: Sparse Entropy Regularization for Prompt Tuning with RL. The Association for Computational Linguistics (ACL) 2024. Oral Presentation. [pdf] [code]

[C9] June-Woo Kim, Miika Toikkanen, Sangmin Bae, Minseok Kim†, Ho-Young Jung†. RepAugment: Input-Agnostic Representation-Level Augmentation for Respiratory Sound Classification. International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 2024. [pdf]

[C8] Yujin Kim, Jaehong Yoon, Seonghyeon Ye, Sangmin Bae, Namgyu Ho, Sung Ju Hwang†, Se-Young Yun†. Carpe diem: On the Evaluation of World Knowledge in Lifelong Language Models. Conference of the North American Chapter of the Association for Computational Linguistics (NAACL) Long Paper 2024. [pdf] [code]

[C7] June-Woo Kim, Sangmin Bae, Won-Yang Cho, Byungjo Lee, Ho-Young Jung†. Stethoscope-guided Supervised Contrastive Learning for Cross-domain Adaptation on Respiratory Sound Classification. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2024. [pdf] [code]

[W5] June-Woo Kim, Chihyeon Yoon, Miika Toikkanen, Sangmin Bae, Ho-Young Jung†. Adversarial Fine-tuning using Generated Respiratory Sound to Address Class Imbalance. Neural Information Processing Systems Workshop on Deep Generative Models for Health (NeurIPSW) 2023. [pdf] [code]

[W4] Felix den Breejen, Sangmin Bae, Stephen Cha, Tae-Young Kim, Seoung-Hyun Koh, Se-Young Yun†. Exploring the Retrieval Mechanism for Tabular Deep Learning. Neural Information Processing Systems Workshop on Table Representation Learning (NeurIPSW) 2023. [pdf]

[C6] Sangmin Bae*, Jongwoo Ko*, Hwanjun Song†, Se-Young Yun†. Fast and Robust Early-Exiting Framework for Autoregressive Language Models with Synchronized Parallel Decoding. Conference on Empirical Methods in Natural Language Processing (EMNLP) Long Paper 2023. [pdf] [code]

[C5] Sangmin Bae*, June-Woo Kim*, Won-Yang Cho, Hyerim Baek, Soyoun Son, Byungjo Lee, Changwan Ha, Kyongpil Tae, Sungnyun Kim†, Se-Young Yun†. Patch-Mix Contrastive Learning with Audio Spectrogram Transformer on Respiratory Sound Classification. Conference of the International Speech Communication Association (INTERSPEECH) 2023. [pdf] [code]

[C4] Sungnyun Kim*, Sangmin Bae*, Se-Young Yun†. Coreset Sampling from Open-Set for Fine-Grained Self-Supervised Learning. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2023. [pdf] [code]

[C3] Sangmook Kim*, Sangmin Bae*, Hwanjun Song†, Se-Young Yun†. Re-thinking Federated Active Learning based on Inter-class Diversity. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2023. [pdf] [code]

[C2] Sangmin Bae*, Sungnyun Kim*, Jongwoo Ko, Gihun Lee, Seungjong Noh, Se-Young Yun†. Self-Contrastive Learning: Single-viewed Supervised Contrastive Framework using Sub-network. The Association for the Advancement of Artificial Intelligence (AAAI) 2023. Oral Presentation. [pdf] [code]

[C1] Gihun Lee*, Minchan Jeong*, Yongjin Shin, Sangmin Bae, Se-Young Yun†. Preservation of Global Knowledge by Not-True Distillation in Federated Learning. Conference on Neural Information Processing Systems (NeurIPS) 2022. [pdf] [code]

[W3] Sangmook Kim*, Sangmin Bae*, Hwanjun Song†, Se-Young Yun†. LG-FAL: Federated Active Learning Strategy using Local and Global Models. International Conference on Machine Learning Workshop on Adaptive Experimental Design and Active Learning in the Real World (ICMLW) 2022. [pdf]

[W2] Sungnyun Kim*, Gihun Lee*, Sangmin Bae*, Se-Young Yun†. MixCo: Mix-up Contrastive Learning for Visual Representation. Neural Information Processing Systems Workshop on Self-Supervised Learning: Theory and Practice (NeurIPSW) 2020. [pdf] [code]

[P1] Taehyeon Kim*, Sangmin Bae*, Jin-woo Lee, Se-Young Yun†. Accurate and Fast Federated Learning via Combinatorial Multi-Armed Bandits. Preprint 2020. [pdf]

[W1] Gihun Lee*, Sangmin Bae*, Jaehoon Oh, Se-Young Yun†. SIPA: A Simple Framework for Efficient Networks. IEEE International Conference on Data Mining Workshop on Big Data Analysis for Smart Energy (ICDMW) 2020. [pdf] [code]

Patents

- [P4] Adam Fisch, Tal Schuster, Hrayr Harutyunyan, Ziwei Ji, Seungyeon Kim, Sangmin Bae. Efficient Decoding of Output Sequences Using Parameter Sharing. US Patent Application. Oct. 2024

- [P3] Se-Young Yun, Seongyoon Kim, Woojin Chung, Sangmin Bae. Toward Enhanced Representation for Federated Re-Identification by Not-True Self Knowledge Distillation. US Patent Application. Apr. 2024

- [P2] Jaehoon Oh, Sangmook Kim, Se-Young Yun, Sangmin Bae, Jaewoo Shin, Seongyoon Kim, Woojin Chung. Federated Learning System for Performing Individual Data Customized Federated Learning, Method for Federated Learning, and Client Apparatus for Performing Same. US Patent Application. May 2023

- [P1] Gihun Lee, Minchan Jeong, Se-Young Yun, Sangmin Bae, Jaeyeon Ahn, Seongyoon Kim, Woojin Chung. System, Method, Computer-Readable Storage Medium and Computer Program for Federated Learning of Local Model based on Learning Direction of Global Model. US Patent Application. May 2023

Awards and Honors

- Selected Proposal for Research Project with LG AI Research. Dec. 2025

- InnoCORE-LLM Postdoctoral Fellowship. Jul. 2025

- $30,000 for Google Research Grant Project. Jun. 2025

- $3,000 for Google Conference Scholarship Program. Aug. 2025

- $30,000 in Google Cloud Grants from Google Cloud Platform (GCP). Aug. 2024

- Silver Award in Signal Processing from Samsung Humantech Paper Awards. Jan. 2024

- Two Best Presentation Awards from Korea Computing Congress (KCC). Aug. 2022

- Best Paper Award (5th Place) from Korean AI Association and LG AI Research (JKAIA). Nov. 2021

- MicroNet Challenge 4th Place at NeurIPS Workshop. Oct. 2019

- Alumni Scholarship from KAIST. Mar. 2017 - Feb. 2019

- Dean's List (Top 3%) at Faculty of Engineering Department in KAIST. Spring 2017

Research Experience

- Postdoctoral Research at KAIST AI, working with Se-Young Yun. Sep. 2025 - Present

- Research Internship at Meta FAIR, advised by Carole-Jean Wu and Bilge Acun. May 2025 - Sep. 2025

- Research Internship at Google DeepMind, advised by Tal Schuster and Adam Fisch. May 2024 - Aug. 2024

- Research Collaboration with MODULABS. Sep. 2022 - Jan. 2024

- Research Collaboration with NAVER AI, advised by Hwanjun Song. Jan. 2022 - Jan. 2023

- Research Internship at Kakao Recommendation Team. Sep. 2018 - Feb. 2019

- Research Internship at Optimization and Statistical Inference Lab, KAIST. Jul. 2018 - Aug. 2018

- Research Internship at Human Factors and Ergonomics Lab, KAIST. Dec. 2017 - Jun. 2018

- Exchange Student at Linköping University. Jul. 2017 - Aug. 2017

Research Projects

- [LG AI Research] Strategic Sub-agents and Hybrid Architectures for Agent Workflows. Project Manager. Jan. 2025 - Present

- [Samsung Research] LLM Compression Project. Oct. 2025 - Present

- [Google] Google Research Grant Project. Project Manager. Jun. 2025 - Present

- [IITP] National AI Research Lab. Project Manager. Nov. 2024 - Sep. 2025

- [KT] Efficient Large Language Model Inference Algorithm. Sep. 2024 - Oct. 2024

- [NIER] Short-term Prediction of Particulate Matter via Artificial Intelligence. Project Manager. Mar. 2023 - May 2024

- [KT] Neural Architecture Search for Detecting Communication Network Failure. Project Manager. Apr. 2022 - Feb. 2023

- [ETRI] Lightweight Edge Device Technology via Federated Learning. Project Manager. Mar. 2021 - Sep. 2022

- [SK Hynix] Semantic Segmentation to Detect Errors in Wafer Process. Feb. 2021 - Sep. 2021

- [ETRI] Data-efficient Unsupervised Representation Learning. Mar. 2020 - Dec. 2020

- [ETRI] Model Compression for Big Data Edge Analysis. Jun. 2019 - Oct. 2019

- [Hankook Tire and Technology] Compound Prediction with Artificial Intelligence and Auto-ML. Mar. 2019 - Feb. 2020

Services

- Server Manager at KAIST AI. Mar. 2021 - Feb. 2023

- Student Leader at OSI Lab, KAIST. Mar. 2021 - Mar. 2022

- Teaching Assistant.

  - KAIST AI505 Optimization for AI. Fall 2021, Fall 2022, Fall 2023

  - KAIST AI603 Machine Learning Theory. Spring 2021, Spring 2023

  - KAIST AI611 Deep Reinforcement Learning. Spring 2022

- Instructor on DL and ML courses.

  - MetaCode: Machine Learning Course. [video] (views 120K) Jun. 2021 - Dec. 2022

  - ForumM: Recommendation Seminar. Nov. 2022

  - LG-KAIST AI: Computer Vision Course. Oct. 2020, Oct. 2021

  - Korea Blockchain Institute: Machine Learning Course. Dec. 2020

  - Samsung DS: Deep Learning Course. Jul. 2020

- Academic Peer Reviewer. Jan. 2024 - Present

© 2023 Sangmin Bae. Thanks to Dr. Hwanjun Song for the template.